Introduction

After many long, intensive hours, my SecurityCam is finally in a state that I would like to show you. It uses cutting-edge technologies that are worth a closer look. But before I start the technology deep dive in a multi-part series, I would like to explain my motivation. Maybe you will recognize yourself in it.

Why a smart SecurityCam

For quite a long time, I have wanted to know what is going on at my doorstep. Above all, I would like to know when the postman arrives, or when he has been there. If you have young children who need an afternoon nap, you know the problem: nobody wants them to be woken by a shrill doorbell. That is something our postmen usually can’t avoid (or don’t want to 😈).

So I simply wanted to build a system that tells me when the postman enters my driveway. The only catch is that most security camera systems do not know what a postman is. That is why I tried my own approach, and this is the result.

This multi-part article is full of technologies that I would like to explain to you in more detail. Perhaps the list below will make you curious.

- Azure IoT Hub

- Azure IoT Edge

- Computer Vision API

- Custom Vision API

- TensorFlow

- Azure Blob Storage

- Jetson Nano

My hardware setup consists, roughly, of a Jetson Nano (an embedded device from NVIDIA) connected to the Internet via Ethernet (Wi-Fi works as well) and a webcam. Since machine learning always needs a bit more computing power and therefore generates heat, I have attached a fan to the Nano.

I have sketched the software-side components as a high-level architecture. In it, the Jetson Nano runs as an edge device and is connected to Microsoft Azure via the IoT Hub (Cloud Gateway). A loudspeaker enables machine-human communication.

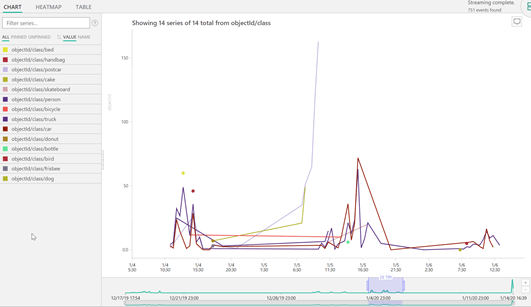

The Nano can operate offline, but it communicates with the cloud whenever an Internet connection is available. It analyzes the images that the webcam provides. Every detected object is cropped out of the frame and placed in local storage. If the system detects a postal van, the message “I see a post bus” is played over the loudspeaker at the same time. As long as the Internet connection is up, the detected objects (image snippets) are transferred to the online storage and deleted locally. In parallel, the event data (which object was sighted, and when) is streamed to the cloud as time-series data. The following image shows the incoming events in a filterable chart.
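
The store-locally, upload-when-online behavior described above can be sketched roughly as follows. This is only a minimal illustration in Python using the standard library, not the code that runs on my device: the names `store_snippet`, `make_event`, `flush_spool` and the `upload` callback are hypothetical, and the real transfer to Azure Blob Storage and the IoT Hub is deliberately left out.

```python
import json
import tempfile
from datetime import datetime, timezone
from pathlib import Path
from typing import Callable


def store_snippet(spool_dir: Path, label: str, image_bytes: bytes) -> Path:
    """Save one cropped object image in local storage and return its path."""
    spool_dir.mkdir(parents=True, exist_ok=True)
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%S%f")
    path = spool_dir / f"{stamp}_{label}.jpg"
    path.write_bytes(image_bytes)
    return path


def make_event(label: str, confidence: float) -> str:
    """Build one time-series event (which object was seen, and when) as JSON."""
    return json.dumps({
        "label": label,
        "confidence": confidence,
        "timestamp": datetime.now(timezone.utc).isoformat(),
    })


def flush_spool(spool_dir: Path, upload: Callable[[Path], bool]) -> int:
    """Try to upload every stored snippet; delete a file only after success.

    `upload` is a hypothetical callback that returns True when the transfer
    to the online storage worked and False when the device is offline.
    """
    uploaded = 0
    for path in sorted(spool_dir.glob("*.jpg")):
        if upload(path):
            path.unlink()  # the cloud copy exists, so the local one can go
            uploaded += 1
    return uploaded


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        spool = Path(tmp) / "snippets"
        store_snippet(spool, "postal_van", b"fake image data")
        print(make_event("postal_van", 0.93))
        # Offline: nothing is uploaded and the snippet stays on disk.
        print(flush_spool(spool, lambda p: False))
        # Online again: the snippet is uploaded and removed locally.
        print(flush_spool(spool, lambda p: True))
```

Deleting a snippet only after the upload callback reports success means a dropped connection never loses an image; it simply stays in local storage until the next attempt.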

Curious? Then continue with the next post: “Custom Vision – I recognize the Postbus”. In that article, I describe how I built a machine learning model for recognizing a postal van.